Auto-Transpose Data

- Matt Zaske

- March 18, 2019

- 4 minutes

Many years ago, an individual in our office retired and the position was not replaced. For several reasons, this was an appropriate decision: the landscape of IT and our localized service portfolio had, for lack of a better phrase, stabilized. Our team was more often innovating in ways involving or prioritizing partnerships over custom builds or infrastructure requirements. We were appropriately consuming centralized services made available as a commodity.

Ultimately, however, some of the retiring individual's duties needed to be absorbed or handled by attrition. I ended up taking on a number of these duties, many of which centered around data procurement and (sometimes) transport.

Ugh, Lists of IDs

Early on in this New World Order, I would receive seemingly "random" one-off requests for institutional data. Most often, they would come in the form of:

I have a list of {identifiers}. Can you pull {related data} for these and send them back to me when you have a chance?

Almost always, the data was a single-column Excel sheet or CSV, like this:

ID

5016

7315

5809

5920

6076

1491

3317

2148

3686

9519

I'd strip out the header (if applicable), and manually transform the list (often by doing some find/replace magic...or just doing it by hand):

'5016','

7315','

5809','

5920','

6076','

1491','

3317','

2148','

3686','

9519'

Then:

'5016','7315','5809','5920','6076','1491','3317','2148','3686','9519'

This would ultimately wind up in an IN statement suitable for the underlying SQL query:

SELECT Things

FROM Data

WHERE ID IN('5016','7315','5809','5920','6076','1491','3317','2148','3686','9519')

Of course, most of the time these one-offs would be simple datasets. 20, 50, 200 records. Not awesome, but never really crossed my threshold of pain.

Until I started receiving larger dataset requests. Sometimes 300, 500, 700, upward of 1,000. And then something had to be done...in part because the underlying Oracle environment limits IN statements to 999 items, which meant breaking up and bolting together requests. We'll talk about query optimization another time, though.

The Problem

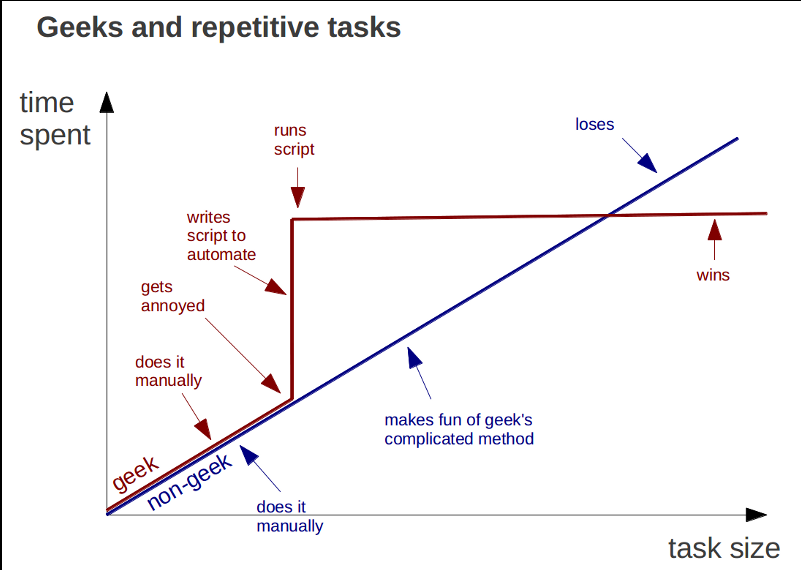

While it's no big deal to handle a couple dozen records, the risk of error on datasets more than about 30 records starts to grow exponentially. Not to mention the super time sink and totally menial task at hand. It's annoying, and I can be doing better things. Must find a way to automate this transposition.

The Simple Solution

My enterprise data procurement environment is Linux-based. Since I have PHP at the ready in that environment, and since PHP is totally my comfort language, I whipped together a simple script to auto-transpose the single-column data into my SQL statement:

<?php

// Generate IN Statement

$statementText = 'IN (';

if (($handle = fopen($inFilePath, "r")) !== FALSE) {

while (($inputData = fgetcsv($handle, 50, ",")) !== FALSE) {

$statementText .= "'$inputData[0]',";

}

fclose($handle);

}

$statementText = rtrim($statementText, ',') . ')';

// Output File

$fp = fopen($outFilePath, "wb");

fwrite($fp, $statementText);

fclose($fp);

?>

The code above is written not to parse anything but the first 50 characters of each line. No more, no less. Proceed with caution, because it was written for a specific, single purpose in mind. The concept is straightforward, though, and easily extensible for a given situation.

In my daily/regular dev environment (on a Windows box), I store the input/output files on a network drive (which is also available on the Linux box), and then use a simple batch script to "run" the PHP remotely (thanks to key auth). Admittedly, I could just have PHP installed on my local box, but I'm often working in many different environments/machines with varying configurations (most without PHP installed) so it's just as easy to run it remotely and leverage the network drive.

I've got the basic script in GitHub; steal/edit/inspire away!